Gather round the fire, kids, it's time for another Debugging Adventure! These are posts where I write up the process of diagnosing the root cause of a bug, where it turned out to be interesting (to me, anyway...)

This case - Bugzilla #1750575 - involved dnfdragora, the package management tool used on Fedora Xfce, which is a release-blocking environment for the ARM architecture. It was a pretty easy bug to reproduce: any time you updated a package, the update would work, but then dnfdragora would show an error "DNF is locked by another process. dnfdragora will exit.", and immediately exit.

The bug sat around on the blocker list for a while; Daniel Mach (a DNF developer) looked into it a bit but didn't have time to figure it out all the way. So I got tired of waiting for someone else to do it, and decided to work it out myself.

Where's the error coming from?

As a starting point, I had a nice error message - so the obvious thing to do is figure out where that message comes from. The text appears in a couple of places in dnfdragora - in an exception handler and also in a method for setting up a connection to dnfdaemon. So, if we didn't already know (I happened to) this would be the point at which we'd realize that dnfdragora is a frontend app to a backend - dnfdaemon - which does the heavy lifting.

So, to figure out in more detail how we were getting to one of these two points, I hacked both the points where that error is logged. Both of them read logger.critical(errmsg). I changed this to logger.exception(errmsg). logger.exception is a very handy feature of Python's logging module which logs whatever message you specify, plus a traceback to the current state, just like the traceback you get if the app actually crashes. So by doing that, the dnfdragora log (it logs to a file dnfdragora.log in the directory you run it from) gave us a traceback showing how we got to the error:

2019-10-14 17:53:29,436 <a href="ERROR">dnfdragora</a> dnfdaemon client error: g-io-error-quark: GDBus.Error:org.baseurl.DnfSystem.LockedError: dnf is locked by another application (36)

Traceback (most recent call last):

File "/usr/bin/dnfdragora", line 85, in <module>

main_gui.handleevent()

File "/usr/lib/python3.7/site-packages/dnfdragora/ui.py", line 1273, in handleevent

if not self._searchPackages(filter, True) :

File "/usr/lib/python3.7/site-packages/dnfdragora/ui.py", line 949, in _searchPackages

packages = self.backend.search(fields, strings, self.match_all, self.newest_only, tags )

File "/usr/lib/python3.7/site-packages/dnfdragora/misc.py", line 135, in newFunc

rc = func(*args, **kwargs)

File "/usr/lib/python3.7/site-packages/dnfdragora/dnf_backend.py", line 464, in search

newest_only, tags)

File "/usr/lib/python3.7/site-packages/dnfdaemon/client/__init__.py", line 508, in Search

fields, keys, attrs, match_all, newest_only, tags))

File "/usr/lib/python3.7/site-packages/dnfdaemon/client/__init__.py", line 293, in _run_dbus_async

result = self._get_result(data)

File "/usr/lib/python3.7/site-packages/dnfdaemon/client/__init__.py", line 277, in _get_result

self._handle_dbus_error(user_data['error'])

File "/usr/lib/python3.7/site-packages/dnfdaemon/client/__init__.py", line 250, in _handle_dbus_error

raise DaemonError(str(err))

dnfdaemon.client.DaemonError: g-io-error-quark: GDBus.Error:org.baseurl.DnfSystem.LockedError: dnf is locked by another application (36)</module>

So, this tells us quite a bit of stuff. We know we're crashing in some sort of 'search' operation, and dbus seems to be involved. We can also see a bit more of the architecture here. Note how we have dnfdragora/dnf_backend.py and dnfdaemon/client/init.py included in the trace, even though we're only in the dnfdragora executable here (dnfdaemon is a separate process). Looking at that and then looking at those files a bit, it's quite easy to see that the dnfdaemon Python library provides a sort of framework for a client class called (oddly enough) DnfDaemonBase which the actual client - dnfdragora in our case - is expected to subclass and flesh out. dnfdragora does this in a class called DnfRootBackend, which inherits from both dnfdragora.backend.Backend (a sort of abstraction layer for dnfdragora to have multiple of these backends, though at present it only actually has this one) and dnfdaemon.client.Client, which is just a small extension to DnfDaemonBase that adds some dbus signal handling.

So now we know more about the design we're dealing with, and we can also see that we're trying to do some sort of search operation which looks like it works by the client class communicating with the actual dnfdaemon server process via dbus, only we're hitting some kind of error in that process, and interpreting it as 'dnf is locked by another application'. If we dig a little deeper, we can figure out a bit more. We have to read through all of the backtrace frames and examine the functions, but ultimately we can figure out that DnfRootBackend.Search() is wrapped by dnfdragora.misc.ExceptionHandler, which handles dnfdaemon.client.DaemonError exceptions - like the one that's ultimately getting raised here! - by calling the base class's own exception_handler() on them...and for us, that's BaseDragora.exception_handler, one of the two places we found earlier that ultimately produces this "DNF is locked by another process. dnfdragora will exit" text. We also now have two indications (the dbus error itself, and the code in exception_handler() that the error we're dealing with is "LockedError".

A misleading error...

At this point, I went looking for the text LockedError, and found it in two files in dnfdaemon that are kinda variants on each other - daemon/dnfdaemon-session.py and daemon/dnfdaemon-system.py. I didn't actually know offhand which of the two is used in our case, but it doesn't really matter, because the codepath to LockedError is the same in both. There's a function called check_lock() which checks that self._lock == sender, and if it doesn't, raises LockedError. That sure looks like where we're at.

So at this point I did a bit of poking around into how self._lock gets set and unset in the daemon. It turns out to be pretty simple. The daemon is basically implemented as a class with a bunch of methods that are wrapped by @dbus.service.method, which makes them accessible as DBus methods. (One of them is Search(), and we can see that the client class's own Search() basically just calls that). There are also methods called Lock() and Unlock(), which - not surprisingly - set and release this lock, by setting the daemon class' self._lock to be either an identifier for the DBus client or None, respectively. And when the daemon is first initialized, the value is set to None.

At this point, I realized that the error we're dealing with here is actually a lie in two important ways:

- The message claims that the problem is the lock being held "by another application", but that's not what

check_lock() checks, really. It passes only if the caller holds the lock. It does fail if the lock is held "by another application", but it also fails if the lock is not held at all. Given all the code we looked at so far, we can't actually trust the message's assertion that something else is holding the lock. It is also possible that the lock is not held at all.

- The message suggests that the lock in question is a lock on dnf itself. I know dnf/libdnf do have locking, so up to now I'd been assuming we were actually dealing with the locking in dnf itself. But at this point I realized we weren't. The dnfdaemon lock code we just looked at doesn't actually call or wrap dnf's own locking code in any way. This lock we're dealing with is entirely internal to dnfdaemon. It's really a lock on the dnfdaemon instance itself.

So, at this point I started thinking of the error as being "dnfdaemon is either locked by another DBus client, or not locked at all".

So what's going on with this lock anyway?

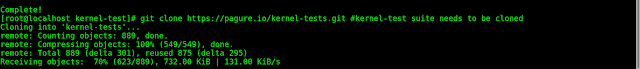

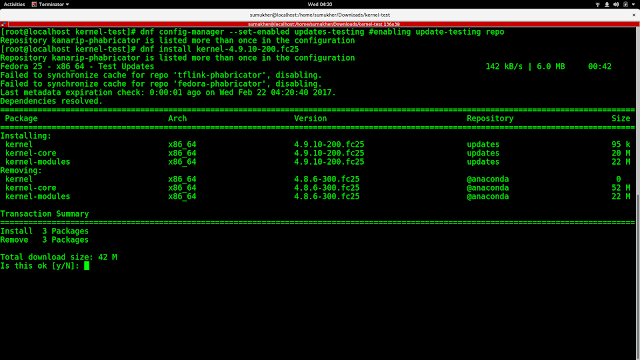

My next step, now I understood the locking process we're dealing with, was to stick some logging into it. I added log lines to the Lock() and Unlock() methods, and I also made check_lock() log what sender and self._lock were set to before returning. Because it sets self._lock to None, I also added a log line to the daemon's init that just records that we're in it. That got me some more useful information:

2019-10-14 18:53:03.397784 XXX In DnfDaemon.init now!

2019-10-14 18:53:03.402804 XXX LOCK: sender is :1.1835

2019-10-14 18:53:03.407524 XXX CHECK LOCK: sender is :1.1835

XXX CHECK LOCK: self._lock is :1.1835

2019-10-14 18:53:07.556499 XXX CHECK LOCK: sender is :1.1835

XXX CHECK LOCK: self._lock is :1.1835

[...snip a bunch more calls to check_lock where the sender is the same...]

2019-10-14 18:53:13.560828 XXX CHECK LOCK: sender is :1.1835

XXX CHECK LOCK: self._lock is :1.1835

2019-10-14 18:53:13.560941 XXX CHECK LOCK: sender is :1.1835

XXX CHECK LOCK: self._lock is :1.1835

2019-10-14 18:53:16.513900 XXX In DnfDaemon.init now!

2019-10-14 18:53:16.516724 XXX CHECK LOCK: sender is :1.1835

XXX CHECK LOCK: self._lock is None

so we could see that when we started dnfdragora, dnfdaemon started up and dnfdragora locked it almost immediately, then throughout the whole process of reproducing the bug - run dnfdragora, search for a package to be updated, mark it for updating, run the transaction, wait for the error - there were several instances of DBus method calls where everything worked fine (we see check_lock() being called and finding sender and self._lock set to the same value, the identifier for dnfdragora), but then suddenly we see the daemon's init running again for some reason, not being locked, and then a check_lock() call that fails because the daemon instance's self._lock is None.

After a couple of minutes, I guessed what was going on here, and the daemon's service logs confirmed it - dnfdaemon was crashing and automatically restarting. The first attempt to invoke a DBus method after the crash and restart fails, because dnfdragora has not locked this new instance of the daemon (it has no idea it just crashed and restarted), so check_lock() fails. So as soon as a DBus method invocation is attempted after the dnfdaemon crash, dnfdragora errors out with the confusing "dnf is locked by another process" error.

The crash was already mentioned in the bug report, but until now the exact interaction between the crash and the error had not been worked out - we just knew the daemon crashed and the app errored out, but we didn't really know what order those things happened in or how they related to each other.

OK then...why is dnfdaemon crashing?

So, the question now became: why is dnfdaemon crashing? Well, the backtrace we had didn't tell us a lot; really it only told us that something was going wrong in libdbus, which we could also tell from the dnfdaemon service log:

Oct 14 18:53:15 adam.happyassassin.net dnfdaemon-system[226042]: dbus[226042]: arguments to dbus_connection_unref() were incorrect, assertion "connection->generation == _dbus_current_generation" failed in file ../../dbus/dbus-connection.c line 2823.

Oct 14 18:53:15 adam.happyassassin.net dnfdaemon-system[226042]: This is normally a bug in some application using the D-Bus library.

Oct 14 18:53:15 adam.happyassassin.net dnfdaemon-system[226042]: D-Bus not built with -rdynamic so unable to print a backtrace

that last line looked like a cue, so of course, off I went to figure out how to build DBus with -rdynamic. A bit of Googling told me - thanks "the3dfxdude"! - that the trick is to compile with --enable-asserts. So I did that and reproduced the bug again, and got a bit of a better backtrace. It's a long one, but by picking through it carefully I could spot - in frame #17 - the actual point at which the problem happened, which was in dnfdaemon.server.DnfDaemonBase.run_transaction(). (Note, this is a different DnfDaemonBase class from dnfdaemon.client.DnfDaemonBase; I don't know why they have the same name, that's just confusing).

So, the daemon's crashing on this self.TransactionEvent('end-run', NONE) call. I poked into what that does a bit, and found a design here that kinda mirrors what happens on the client side: this DnfDaemonBase, like the other one, is a framework for a full daemon implementation, and it's subclassed by a DnfDaemon class here. That class defines a TransactionEvent method that emits a DBus signal. So...we're crashing when trying to emit a dbus signal. That all adds up with the backtrace going through libdbus and all. But, why are we crashing?

At this point I tried to make a small reproducer (which basically just set up a DnfDaemon instance and called self.TransactionEvent in the same way, I think) but that didn't work - I didn't know why at the time, but figured it out later. Continuing to trace it out through code wouldn't be that easy because now we're in DBus, which I know from experience is a big complex codebase that's not that easy to just reason your way through. We had the actual DBus error to work from too - "arguments to dbus_connection_unref() were incorrect, assertion "connection->generation == _dbus_current_generation" failed" - and I looked into that a bit, but there were no really helpful leads there (I got a bit more understanding about what the error means exactly, but it didn't help me understand why it was happening at all).

Time for the old standby...

So, being a bit stuck, I fell back on the most trusty standby: trial and error! Well, also a bit of logic. It did occur to me that the dbus broker is itself a long-running daemon that other things can talk to. So I started just wondering if something was interfering with dnfdaemon's connection with the dbus broker, somehow. This was in my head as I poked around at stuff - wherever I wound up looking, I was looking for stuff that involved dbus.

But to figure out where to look, I just started hacking up dnfdaemon a bit. Now this first part is probably pure intuition, but that self._reset_base() call on the line right before the self.TransactionEvent call that crashes bugged me. It's probably just long experience telling me that anything with "reset" or "refresh" in the name is bad news. :P So I thought, hey, what happens if we move it?

I stuck some logging lines into this run_transaction so I knew where we got to before we crashed - this is a great dumb trick, btw, just stick lines like self.logger('XXX HERE 1'), self.logger('XXX HERE 2') etc. between every significant line in the thing you're debugging, and grep the logs for "XXX" - and moved the self._reset_base() call down under the self.TransactionEvent call...and found that when I did that, we got further, the self.TransactionEvent call worked and we crashed the next time something else tried to emit a DBus signal. I also tried commenting out the self._reset_base() call entirely, and found that now we would only crash the next time a DBus signal was emitted after a subsequent call to the Unlock() method, which is another method that calls self._reset_base(). So, at this point I was pretty confident in this description: "dnfdaemon is crashing on the first interaction with DBus after self._reset_base() is called".

So my next step was to break down what _reset_base() was actually doing. Turns out all of the detail is in the DnfDaemonBase skeleton server class: it has a self._base which is a dnf.base.Base() instance, and that method just calls that instance's close() method and sets self._base to None. So off I went into dnf code to see what dnf.base.Base.close() does. Turns out it basically does two things: it calls self._finalize_base() and then calls self.reset(True, True, True).

Looking at the code it wasn't immediately obvious which of these would be the culprit, so it was all aboard the trial and error train again! I changed the call to self._reset_base() in the daemon to self._base.reset(True, True, True)...and the bug stopped happening! So that told me the problem was in the call to _finalize_base(), not the call to reset(). So I dug into what _finalize_base() does and kinda repeated this process - I kept drilling down through layers and splitting up what things did into individual pieces, and doing subsets of those pieces at a time to try and find the "smallest" thing I could which would cause the bug.

To take a short aside...this is what I really like about these kinds of debugging odysseys. It's like being a detective, only ultimately you know that there's a definite reason for what's happening and there's always some way you can get closer to it. If you have enough patience there's always a next step you can take that will get you a little bit closer to figuring out what's going on. You just have to keep working through the little steps until you finally get there.

Eventually I lit upon this bit of dnf.rpm.transaction.TransactionWrapper.close(). That was the key, as close as I could get to it: reducing the daemon's self._reset_base() call to just self._base._priv_ts.ts = None (which is what that line does) was enough to cause the bug. That was the one thing out of all the things that self._reset_base() does which caused the problem.

So, of course, I took a look at what this ts thing was. Turns out it's an instance of rpm.TransactionSet, from RPM's Python library. So, at some point, we're setting up an instance of rpm.TransactionSet, and at this point we're dropping our reference to it, which - point to ponder - might trigger some kind of cleanup on it.

Remember how I was looking for things that deal with dbus? Well, that turned out to bear fruit at this point...because what I did next was simply to go to my git checkout of rpm and grep it for 'dbus'. And lo and behold...this showed up.

Turns out RPM has plugins (TIL!), and in particular, it has this one, which talks to dbus. (What it actually does is try to inhibit systemd from suspending or shutting down the system while a package transaction is happening). And this plugin has a cleanup function which calls something called dbus_shutdown() - aha!

This was enough to get me pretty suspicious. So I checked my system and, indeed, I had a package rpm-plugin-systemd-inhibit installed. I poked at dependencies a bit and found that python3-dnf recommends that package, which means it'll basically be installed on nearly all Fedora installs. Still looking like a prime suspect. So, it was easy enough to check: I put the code back to a state where the crash happened, uninstalled the package, and tried again...and bingo! The crash stopped happening.

So at this point the case was more or less closed. I just had to do a bit of confirming and tidying up. I checked and it turned out that indeed this call to dbus_shutdown() had been added quite recently, which tied in with the bug not showing up earlier. I looked up the documentation for dbus_shutdown() which confirmed that it's a bit of a big cannon which certainly could cause a problem like this:

"Frees all memory allocated internally by libdbus and reverses the effects of dbus_threads_init().

libdbus keeps internal global variables, for example caches and thread locks, and it can be useful to free these internal data structures.

...

You can't continue to use any D-Bus objects, such as connections, that were allocated prior to dbus_shutdown(). You can, however, start over; call dbus_threads_init() again, create new connections, and so forth."

and then I did a scratch build of rpm with the commit reverted, tested, and found that indeed, it solved the problem. So, we finally had our culprit: when the rpm.TransactionSet instance went out of scope, it got cleaned up, and that resulted in this plugin's cleanup function getting called, and dbus_shutdown() happening. The RPM devs had intended that call to clean up the RPM plugin's DBus handles, but this is all happening in a single process, so the call also cleaned up the DBus handles used by dnfdaemon itself, and that was enough (as the docs suggest) to cause any further attempts to communicate with DBus in dnfdaemon code to blow up and crash the daemon.

So, that's how you get from dnfdragora claiming that DNF is locked by another process to a stray RPM plugin crashing dnfdaemon on a DBus interaction!

.jpg)

opensource.

opensource.